China plans to restrict artificial intelligence-powered chatbots from influencing human emotions in ways that could lead to suicide or self-harm, according to draft rules released on Saturday.

The regulations, proposed by the Cyberspace Administration, target what it calls “human-like interactive AI services,” according to a CNBC translation of the Chinese-language document.

The measures would apply to AI products or services offered to the public in China that simulate human personality and engage users emotionally through text, images, audio, or video. The public comment period ends on January 25.

Winston Ma, an adjunct professor at NYU School of Law, said Beijing's planned rules would be the world's first attempt to regulate AI with human or anthropomorphic characteristics.

The latest proposals come as Chinese companies have rapidly developed AI assistants and digital celebrities. Compared with China's 2023 generative AI regulation, Ma wrote, this version “highlights a leap from content safety to emotional safety.”

The draft rules propose that:

- AI chatbots cannot generate content that encourages suicide or self-harm, or engage in verbal violence or emotional manipulation that damages users’ mental health.

- If a user explicitly raises suicide, tech providers must have a human take over the conversation and immediately contact the user’s guardian or a designated individual.

- AI chatbots must not generate gambling-related, obscene, or violent content.

- Minors must have guardian consent to use AI for emotional companionship, with time limits on usage.

- Platforms should be able to determine whether a user is a minor even if the user does not disclose their age, and, in cases of doubt, apply settings for minors, while allowing for appeals.

Other provisions would require tech providers to remind users after two hours of continuous interaction with AI, and would mandate security assessments for AI chatbots with at least 1 million registered users or more than 100,000 monthly active users.

The document also encouraged the use of human-like AI in “cultural dissemination and elderly companionship.” The proposal comes shortly after two of China's leading AI chatbot companies, Z.ai and Minimax, filed for initial public offerings in Hong Kong this month.

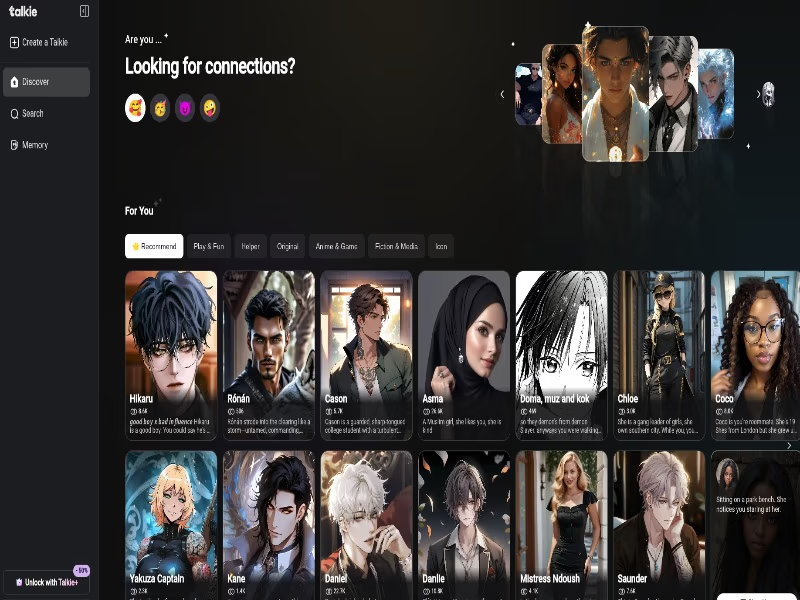

Minimax is best known globally for its Talkie AI app, which lets users chat with virtual characters. The app and its domestic Chinese counterpart, Xingye, accounted for more than a third of the company's revenue in the first three quarters of the year, with an average of over 20 million monthly active users during that period.

Z.ai, also known as Zhipu, filed under the name “Knowledge Atlas Technology.” While the company did not disclose its monthly active users, it said its technology had “empowered” around 80 million devices, including smartphones, personal computers, and smart vehicles.

Neither company responded to CNBC's request for comment on how the proposed rules could affect their planned IPOs.

Scrutiny of AI's influence on human behavior has intensified this year.

Sam Altman, CEO of U.S.-based ChatGPT operator OpenAI, said in September that how its chatbot handles conversations involving suicide is one of the company's most difficult issues.

The month before, a U.S. family had sued OpenAI after their teenage son died by suicide.

Amid mounting concern, OpenAI posted a job listing over the weekend for a “Head of Preparedness,” whose responsibilities will include evaluating AI risks to mental health and cybersecurity.

Still, many people are increasingly turning to AI for relationships. This month, a woman in Japan married her AI boyfriend.

Two platforms built around chatting with virtual characters, Character.ai and Polybuzz.ai, ranked among the 15 most popular AI chatbots and tools in SimilarWeb's November rankings.

The proposed domestic rules are part of a broader push by China over the past year to shape global AI regulation.